By Hannah Mullaney – 28th April 2022

“It’s just what they think of themselves.”

“Others might say differently.”

“They’ll just say what they think you want to hear.”

Sound familiar? These are common views associated with ‘self-report’, but they actually reflect the myths and misconceptions that we will tackle in this short article.

We learned a lot about the value of self-report during the two years we spent developing Wave®, our market-leading personality questionnaire. Here we share some practical tips on how to ensure that data from all self-report measures, including interviews, is as effective as possible.

There is strong evidence that interviews, when done properly, do a very good job of predicting workplace performance. The same can be said for personality questionnaires. In fact, the evidence suggests that both are better at predicting job performance than the observational techniques used as assessment center exercises.

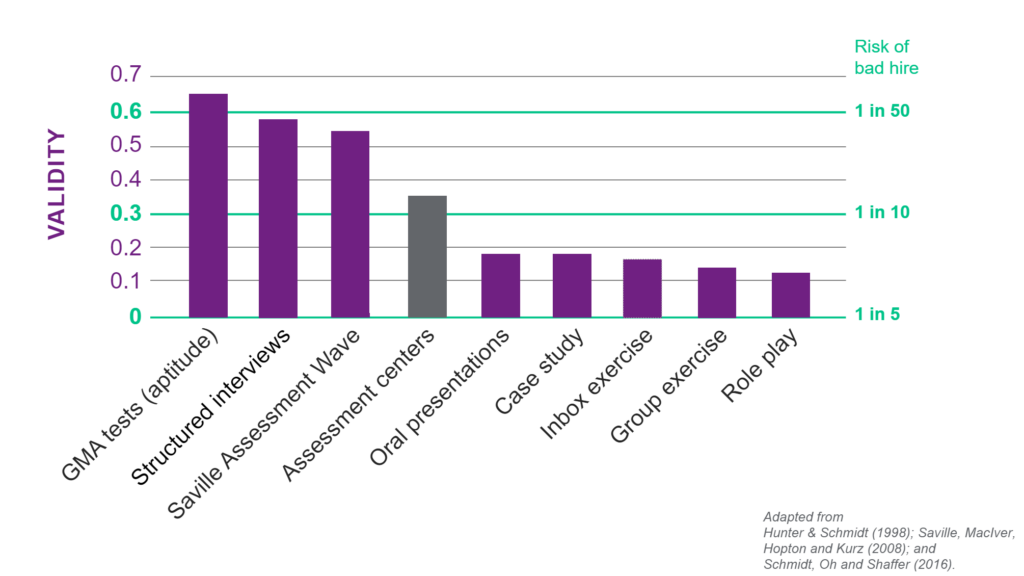

The graph above summarizes how effective different assessment methodologies are at predicting workplace performance. Aptitude tests lead the field. Structured interviews and Wave follow close behind.

Assessment center exercises, in comparison, do very poorly and struggle even to reach the industry-standard validity of 0.3. A tool’s validity determines its effectiveness; the lower the validity score, the greater the risk of making the wrong selection decision and hiring a poor performer.

With no validity (a score of 0), the chances of making a poor hire are 1/5. Bring in an assessment which meets the industry standard for validity (0.3) and you reduce this to 1/10. But use a tool with a validity score of 0.6 and you reduce this to 1/50.

The relationship between validity and getting the right people, rather than the wrong people, is exponential. To maximize your hit rate, you have to climb up the validity graph and use the assessment methods proven to be most effective.
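The arithmetic behind these figures can be laid out in a few lines of Python. This is only a sketch that restates the three odds quoted above. The function names are illustrative assumptions, and nothing here models validity values other than 0, 0.3 and 0.6.

```python
from fractions import Fraction

# Poor-hire odds quoted in the article, keyed by validity score.
# Only these three points are given; no curve is fitted between them.
POOR_HIRE_ODDS = {
    0.0: Fraction(1, 5),
    0.3: Fraction(1, 10),
    0.6: Fraction(1, 50),
}


def expected_poor_hires(validity: float, hires: int) -> float:
    """Expected number of poor hires among `hires` at a quoted validity."""
    return float(POOR_HIRE_ODDS[validity] * hires)


def improvement_factor(from_validity: float, to_validity: float) -> Fraction:
    """How many times less likely a poor hire becomes between two quoted validities."""
    return POOR_HIRE_ODDS[from_validity] / POOR_HIRE_ODDS[to_validity]


for v in sorted(POOR_HIRE_ODDS):
    print(f"validity {v:.1f}: {expected_poor_hires(v, 100):.0f} poor hires per 100")

print("0.0 -> 0.3 improvement factor:", improvement_factor(0.0, 0.3))
print("0.3 -> 0.6 improvement factor:", improvement_factor(0.3, 0.6))
```

On the quoted figures, the first 0.3 of validity halves the chance of a poor hire, while the next 0.3 cuts it fivefold. That is why each step up the validity scale is worth more than the last.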

*Includes all assessment methods generally deemed acceptable for use in hiring across different occupations

We are often asked why Wave also has such strong validity. The answer is actually very simple: it is all in the questions. One of the main lessons we took from developing Wave is that the exact wording of questions is critical and small differences really do matter; the slightest change can affect a question’s ability to predict performance.

There are 216 questions in the Wave Professional Styles questionnaire. We started with over 4,000 questions, carefully crafted by a team of four highly skilled psychometricians who between them had over 100 years’ experience. We selected the very best of those questions based on how well they targeted the key behavior, and then used data to identify those most effective at predicting performance.

Have a look at the list of questions below and decide whether you think they are ‘good’ or ‘bad’ in terms of effectiveness at predicting performance at work. They would usually be presented in a way that meant you had to state the extent to which you agreed or disagreed with each item.

Bad item. The problem here is the word ‘random’. Random ideas are often going to be irrelevant, so the question isn’t going to do particularly well at telling you how effective someone is likely to be at creating innovation at work. Simply removing the word ‘random’ makes this a much stronger item.

Bad item. The problem here is that it isn’t an active behavior. You might have concern but not actually do anything about it. A better item is “I show empathy for others”.

Bad item. This is problematic because the word ‘bold’ has multiple meanings: in England it means courageous, whereas in Ireland it means naughty. As soon as you bring in ambiguity, you lose effectiveness.

Bad item. You could disagree with this one because you believe you never make mistakes, yet when you disagree with it, the questionnaire assumes you always make mistakes. This brings in complexity and adds to the cognitive load of the assessment, which means you end up measuring cognitive skills rather than behavior.

Bad item. Double negatives again significantly increase cognitive load. Simplicity is key.

Bad item. This one has translation issues and in some languages is translated to roughly “I do things by feeling my stomach”.

Bad item. We want to avoid ‘and’ because this means what you are getting at is much less targeted. It is also possible to be quiet but not reserved and reserved but not quiet.
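Several of the pitfalls above are mechanical enough to screen for before an item reaches expert review. The Python sketch below is a hypothetical first-pass checker, not the process used to build Wave. The word lists, the `review_item` function and the sample items are illustrative assumptions, and a simple keyword match cannot judge whether an item describes an active behavior or will translate well.

```python
import re

# Illustrative word lists (assumptions, not an official standard).
CONJUNCTIONS = {"and", "or"}
NEGATIONS = {"not", "never", "no", "cannot", "can't", "don't", "doesn't", "won't"}
ABSOLUTES = {"never", "always"}
FLAGGED_WORDS = {"bold", "random"}  # the two words the article singles out


def review_item(item: str) -> list[str]:
    """Return a list of issue codes for a questionnaire item (empty if none found)."""
    # Normalize curly apostrophes so contractions such as "don’t" match.
    text = item.lower().replace("\u2019", "'")
    words = re.findall(r"[a-z']+", text)

    issues = []
    if any(w in CONJUNCTIONS for w in words):
        issues.append("conjunction")      # item may target two behaviors at once
    if sum(w in NEGATIONS for w in words) >= 2:
        issues.append("double_negative")  # raises cognitive load
    if any(w in ABSOLUTES for w in words):
        issues.append("absolute")         # disagreeing becomes hard to interpret
    if any(w in FLAGGED_WORDS for w in words):
        issues.append("flagged_word")     # ambiguous or off-target wording
    return issues


# Hypothetical sample items, written only to exercise the checks.
for sample in [
    "I show empathy for others",
    "I am quiet and reserved",
    "I am not someone who never plans ahead",
]:
    print(sample, "->", review_item(sample) or "no issues flagged")
```

A clean result here only means none of the listed patterns appeared. Whether an item actually predicts performance still has to be established with data, as described earlier.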

In summary, when it comes to self-report in terms of interviews or personality questionnaires, the following will optimize effectiveness:

If you would like help to ensure your self-report measures are as effective as they can be, please do get in touch.

I also explore a little more of the science behind good question construction within personality assessments in a recent webinar “Leveraging Wave for Better Hiring” and you can listen to the recording.

I have focused mainly on the use of self-report in recruitment. Further insight on using self-report tools in a development context is coming; make sure you are on our mailing list for updates.

© 2024 Saville Assessment. All rights reserved.